ResNet

Description

- Features of ResNet:

- ResNet Architecture:

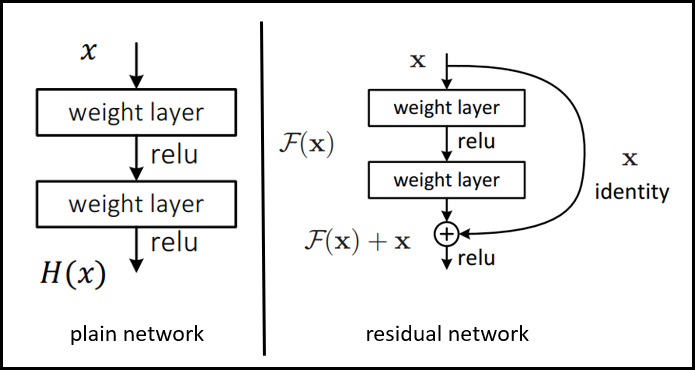

- ResNet - Residual Block

- Plain network: VGGNet-based

- Convolution layers: 3x3 filters; downsampling by convolutions with stride = 2

- Global Average Pooling applied

- FC layer(1000), softmax

- Residual network: based on the plain network

- Shortcut connections are added

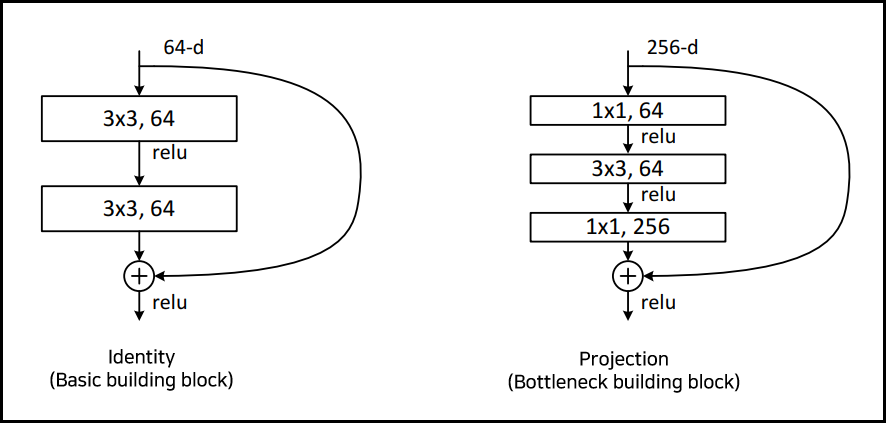

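- When the dimensions are equal: the input is passed through directly (identity shortcut)

- When the dimensions increase:

- Match the dimensions with zero padding

- Match the dimensions with a 1x1 conv layer (as used in VGG or GoogleNet)

- stride = 2 (for shortcuts that cross feature maps of two sizes)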
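A minimal pure-Python sketch of the zero-padding shortcut. It assumes a feature map stored as nested lists indexed `[channel][row][col]`, and the helper names `zero_pad_shortcut` and `add_residual` are hypothetical (they are not part of the PyTorch implementation later in this post). The shortcut keeps every second pixel (stride = 2) and appends all-zero channels, so it adds no parameters. The block output is then F(x) + shortcut(x).

```python
def zero_pad_shortcut(x, out_channels, stride=2):
    """Parameter-free shortcut: subsample spatially by `stride`, then append
    all-zero channels until the channel count reaches `out_channels`."""
    # Keep every `stride`-th row and column of each channel
    sub = [[row[::stride] for row in channel[::stride]] for channel in x]
    height = len(sub[0])
    width = len(sub[0][0]) if height else 0
    # Zero channels carry no learnable weights
    zeros = [[[0.0] * width for _ in range(height)]
             for _ in range(out_channels - len(sub))]
    return sub + zeros


def add_residual(fx, shortcut):
    """Element-wise F(x) + shortcut(x); both must have identical shapes."""
    return [[[a + b for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(fx, shortcut)]


# Two 4x4 input channels -> four 2x2 output channels (the last two are zeros)
x = [[[float(c * 16 + r * 4 + col) for col in range(4)] for r in range(4)] for c in range(2)]
s = zero_pad_shortcut(x, out_channels=4)
print(s[0])  # [[0.0, 2.0], [8.0, 10.0]]
print(s[3])  # [[0.0, 0.0], [0.0, 0.0]]
```

PyTorch code usually expresses the same idea with tensor slicing (`x[:, :, ::2, ::2]`) and `torch.nn.functional.pad` on the channel dimension.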

- ResNet Projection (Bottleneck Building Block):

- Residual network shortcut types:

- Identity shortcut

- Projection shortcut, which scales the dimensions with a 1x1 convolution
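The bottleneck block trades width for depth. The sketch below counts convolution weights, assuming bias-free convolutions and ignoring BatchNorm parameters; the helper names are illustrative. It reproduces the paper's point that a bottleneck block on a 256-channel input costs about as much as a basic block at 64 channels. It also shows the extra weights a 1x1 projection shortcut adds, compared with the parameter-free identity shortcut.

```python
def conv_params(in_c, out_c, k):
    """Weights of a bias-free k x k convolution (BatchNorm parameters ignored)."""
    return in_c * out_c * k * k


def basic_block_params(channels):
    """Basic building block: two 3x3 convolutions at a constant width."""
    return 2 * conv_params(channels, channels, 3)


def bottleneck_params(in_c, width, expansion=4):
    """Bottleneck block: 1x1 reduce -> 3x3 -> 1x1 restore (to width * expansion)."""
    return (conv_params(in_c, width, 1)
            + conv_params(width, width, 3)
            + conv_params(width, width * expansion, 1))


def projection_params(in_c, out_c):
    """Extra weights added by a 1x1 projection shortcut (identity adds none)."""
    return conv_params(in_c, out_c, 1)


print(basic_block_params(64))       # 73728
print(bottleneck_params(256, 64))   # 69632
print(projection_params(256, 512))  # 131072
```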

- Augmentation

- Input image shape = 224 x 224 x 3

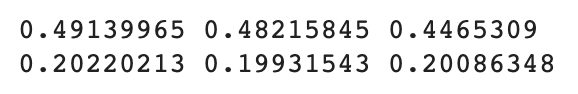

- Mean subtraction of RGB per channel
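Per-channel mean subtraction computes one scalar per colour channel (R, G, B) over the training set and subtracts it from every pixel of that channel. Below is a minimal pure-Python sketch. It assumes images are stored as `[channel][row][col]` nested lists, and the helper names are hypothetical. The PyTorch code later in this post does the same thing with `transforms.Normalize`, which also divides by a per-channel std.

```python
def channel_means(images):
    """Mean of each channel over all pixels of all images.
    images: list of images, each stored as [channel][row][col]."""
    num_channels = len(images[0])
    means = []
    for c in range(num_channels):
        values = [v for img in images for row in img[c] for v in row]
        means.append(sum(values) / len(values))
    return means


def subtract_channel_means(image, means):
    """Subtract one scalar per channel from every pixel of that channel."""
    return [[[v - means[c] for v in row] for row in channel]
            for c, channel in enumerate(image)]


# Two tiny 3-channel "images" of size 1x2
img1 = [[[0.0, 2.0]], [[1.0, 1.0]], [[4.0, 4.0]]]
img2 = [[[4.0, 6.0]], [[3.0, 3.0]], [[0.0, 0.0]]]
means = channel_means([img1, img2])
print(means)                                # [3.0, 2.0, 2.0]
print(subtract_channel_means(img1, means))  # [[[-3.0, -1.0]], [[-1.0, -1.0]], [[2.0, 2.0]]]
```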

- Layer

- Batch Normalization applied (between each convolution and its activation function)

- Dropout = None

- ResNet18, ResNet34: use the Basic Building Block

- ResNet50, ResNet101, ResNet152: use the Bottleneck Building Block

- Max Pooling = 1 layer (kernel size = 3x3, stride = 2)

- Global Average Pooling = 1 layer (stride = 1)

- When the dimensions increase in the residual network:

- Zero padding: handles the increase without any additional parameters

- 1x1 convolution (projection): scales the shortcut to the new dimensions, at the cost of extra parameters
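The number in each model name counts weighted layers. These are the layers of the blocks in the four stages (conv2_x to conv5_x), plus the 7x7 conv1 and the final FC layer. Pooling layers are not counted. A quick check in Python, using the per-stage block counts from the paper's architecture table (the `CONFIGS` dictionary and `depth` helper are illustrative):

```python
# Blocks per stage (conv2_x .. conv5_x) for each variant, plus the number of
# weighted layers per block (2 for basic blocks, 3 for bottleneck blocks)
CONFIGS = {
    "ResNet18": ([2, 2, 2, 2], 2),
    "ResNet34": ([3, 4, 6, 3], 2),
    "ResNet50": ([3, 4, 6, 3], 3),
    "ResNet101": ([3, 4, 23, 3], 3),
    "ResNet152": ([3, 8, 36, 3], 3),
}


def depth(blocks_per_stage, layers_per_block):
    """Weighted layers = stacked block layers + conv1 (7x7) + the final FC layer."""
    return sum(blocks_per_stage) * layers_per_block + 2


for name, (blocks, per_block) in CONFIGS.items():
    print(name, depth(blocks, per_block))
```

ResNet34 and ResNet50 share the same block counts. The extra depth comes only from the third layer in every bottleneck block.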

- Hyper-parameter

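- Optimizer = SGD (paper) -> changed to Adam (implementation)

- Momentum = 0.9 (paper)

- Weight Decay = 0.0001 (paper)

- Batch size = 256 (paper) -> 128 (implementation)

- Learning rate = step schedule (starts at 0.1 and is divided by 10 when the error plateaus) (paper) -> fixed at 0.001 (implementation)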

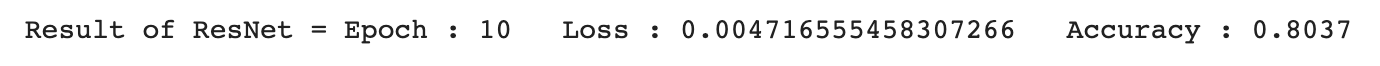

- Epochs = not specified in the paper -> 10 (implementation)

- Trained for up to 60 × 10^4 iterations (paper)

- Dropout = None
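Below is a sketch of a step learning-rate schedule. The milestones are assumptions taken from the paper's CIFAR-10 settings: start at 0.1, divide by 10 at 32k and 48k iterations, and stop at 64k. The ImageNet run instead divides whenever the error plateaus. In PyTorch, `torch.optim.lr_scheduler.MultiStepLR` (fixed milestones) and `ReduceLROnPlateau` (plateau-based) cover these two cases. The second helper converts epochs to iterations. It shows that 10 epochs of CIFAR-10 at batch size 128 amount to only a few thousand iterations. Both helper names are illustrative.

```python
import math


def step_lr(iteration, base_lr=0.1, milestones=(32_000, 48_000), gamma=0.1):
    """Step schedule: multiply the learning rate by `gamma` for each milestone passed.
    Default milestones are an assumption (the paper's CIFAR-10 setting)."""
    passed = sum(1 for m in milestones if iteration >= m)
    return base_lr * gamma ** passed


def iterations_per_epoch(dataset_size, batch_size):
    """Number of mini-batches per epoch (the last, smaller batch included)."""
    return math.ceil(dataset_size / batch_size)


print(step_lr(0), step_lr(32_000), step_lr(50_000))
print(iterations_per_epoch(50_000, 128) * 10)  # 3910 iterations in 10 epochs
```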

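- Fully Connected Layer (FC Layer):

- A single FC layer on the pooled features: 512 × expansion inputs (512 for ResNet18/34, 2048 for ResNet50) -> num_classes outputs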
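Global average pooling turns each channel of the last feature map into a single number. As a result, the FC layer always sees a vector whose length equals the channel count (512 × 4 = 2048 for the ResNet50 below), regardless of the spatial size. Below is a minimal pure-Python sketch of GAP followed by a fully connected layer. The helper names are illustrative, and the feature map is assumed to be stored as `[channel][row][col]`.

```python
def global_average_pool(feature_map):
    """[channel][row][col] -> one average per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def linear(features, weights, bias):
    """Fully connected layer: out[j] = sum_i weights[j][i] * features[i] + bias[j]."""
    return [sum(w * f for w, f in zip(w_row, features)) + b
            for w_row, b in zip(weights, bias)]


fm = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 2.0], [4.0, 6.0]]]  # 2 channels, 2x2 each
pooled = global_average_pool(fm)
print(pooled)  # [1.0, 3.0]
print(linear(pooled, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.5]))  # [1.0, 3.0, 4.5]
```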

- Dataset:

- Paper: ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012, CIFAR-10

- Implementation: CIFAR-10

- CIFAR-10 Result(paper):

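- Unlike the plain networks, ResNet's accuracy improves as the network gets deeper

- When the network is extremely deep, the error increases again (the 1202-layer network does worse than the 110-layer one, which the authors attribute to overfitting)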

- System Environment:

- Google Colab Pro

Reference

- https://roytravel.tistory.com/339

- https://inhovation97.tistory.com/46?category=920765

- https://github.com/weiaicunzai/pytorch-cifar100/blob/master/models/resnet.py

- https://github.com/pytorch/vision/blob/main/torchvision/models/resnet.py

- https://blackchopin.github.io/imagerecognition/ResNet/

- K. He, X. Zhang, S. Ren and J. Sun, "Deep Residual Learning for Image Recognition," 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016, pp. 770-778, doi: 10.1109/CVPR.2016.90. https://arxiv.org/pdf/1512.03385.pdf

Load Modules

# Utils
import numpy as np
from tqdm import tqdm
import matplotlib.pyplot as plt
from typing import Optional

# Torch
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import Tensor
import torchvision
from torchvision import transforms
from torchsummary import summary

# TensorBoard: torch.utils.tensorboard ships with PyTorch,
# so a separate tensorboardX install/import is not needed
from torch.utils.tensorboard import SummaryWriter

Set Hyperparameters

batch_size = 128
num_epochs = 10
learning_rate = 0.001

Load Data - CIFAR10

train_set = torchvision.datasets.CIFAR10(root='./cifar10', train=True, download=True, transform=transforms.ToTensor())
test_set = torchvision.datasets.CIFAR10(root='./cifar10', train=False, download=True, transform=transforms.ToTensor())

Mean subtraction of RGB per channel

# Per-image channel statistics (each x is a 3x32x32 tensor with values in [0, 1])
train_meanRGB = [np.mean(x.numpy(), axis=(1, 2)) for x, _ in train_set]
train_stdRGB = [np.std(x.numpy(), axis=(1, 2)) for x, _ in train_set]

# Average over the training set. The std here is an average of per-image stds,
# which is no larger than the std over all training pixels.
train_meanR = np.mean([m[0] for m in train_meanRGB])
train_meanG = np.mean([m[1] for m in train_meanRGB])
train_meanB = np.mean([m[2] for m in train_meanRGB])

train_stdR = np.mean([s[0] for s in train_stdRGB])
train_stdG = np.mean([s[1] for s in train_stdRGB])
train_stdB = np.mean([s[2] for s in train_stdRGB])

print(train_meanR, train_meanG, train_meanB)
print(train_stdR, train_stdG, train_stdB)
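The cell above averages per-image standard deviations rather than computing the standard deviation over all training pixels. For the mean this makes no difference, because all images have the same size. The averaged std, however, leaves out the variation between image means, so it can only be equal to or smaller than the pooled std. Below is a tiny pure-Python illustration. It assumes single-channel images flattened to lists, and the helper names are hypothetical.

```python
import statistics


def mean_of_per_image_std(images):
    """What the notebook computes: the std of each image, then averaged."""
    return statistics.fmean([statistics.pstdev(img) for img in images])


def dataset_std(images):
    """Std over all pixels of all images pooled together."""
    return statistics.pstdev([v for img in images for v in img])


imgs = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]]  # two flat, single-channel "images"
print(mean_of_per_image_std(imgs))  # 0.0
print(dataset_std(imgs))            # 0.5
```

Whether the gap matters much for CIFAR-10 in practice is not measured here. Normalization only needs a reasonable scale, so either value will usually train.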

Define the image transformation for data

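train_transformer = transforms.Compose([
    transforms.Resize((224, 224)),  # upsample 32x32 CIFAR-10 images to the paper's 224x224 input
    transforms.ToTensor(),
    transforms.Normalize(mean=[train_meanR, train_meanG, train_meanB],
                         std=[train_stdR, train_stdG, train_stdB]),
])

train_set.transform = train_transformer
# No random augmentation is included, so the same transform can be reused for the test set
test_set.transform = train_transformer

Define DataLoader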

trainloader = torch.utils.data.DataLoader(train_set, batch_size=batch_size, shuffle=True, num_workers=2)
# Shuffling is unnecessary for evaluation
testloader = torch.utils.data.DataLoader(test_set, batch_size=batch_size, shuffle=False, num_workers=2)

Create ResNet Model
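One quick way to sanity-check the stage layout of the ResNet50 in this section is to compute its feature-map sizes by hand. The sketch below is plain Python, and `conv_out` and `trace_resnet50` are illustrative names. It applies the standard output-size formula floor((n + 2p - k) / s) + 1 to each strided layer. A 224×224 input ends at 7×7 in conv5_x. A raw 32×32 CIFAR-10 image would already be 1×1 there, which is presumably one reason the transform upsamples to 224×224.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution/pooling window: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet50(size=224):
    """Spatial size after each stage of the ResNet50 layout used in this post."""
    sizes = {}
    size = conv_out(size, 7, 2, 3)  # conv1: 7x7, stride 2, padding 3
    sizes["conv1"] = size
    size = conv_out(size, 3, 2, 1)  # 3x3 max pooling, stride 2, padding 1
    sizes["maxpool"] = size
    for name, stride in [("conv2_x", 1), ("conv3_x", 2), ("conv4_x", 2), ("conv5_x", 2)]:
        # Only the 3x3 conv in the first block of a stage has stride != 1;
        # every other conv in the stage preserves the spatial size
        size = conv_out(size, 3, stride, 1)
        sizes[name] = size
    return sizes


print(trace_resnet50(224))  # {'conv1': 112, 'maxpool': 56, 'conv2_x': 56, 'conv3_x': 28, 'conv4_x': 14, 'conv5_x': 7}
print(trace_resnet50(32))   # {'conv1': 16, 'maxpool': 8, 'conv2_x': 8, 'conv3_x': 4, 'conv4_x': 2, 'conv5_x': 1}
```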

- BottleNeck

class BottleNeck(nn.Module):
    # The last 1x1 conv expands the channel count by this factor
    expansion = 4

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()

        # Residual branch F(x): 1x1 (reduce) -> 3x3 (stride here downsamples) -> 1x1 (expand)
        self.residual_function = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channels, out_channels, stride=stride, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channels, out_channels * BottleNeck.expansion, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_channels * BottleNeck.expansion),
        )

        # Identity shortcut by default (an empty Sequential returns its input unchanged)
        self.shortcut = nn.Sequential()

        # Projection shortcut (1x1 conv) when the spatial size or channel count changes
        if stride != 1 or in_channels != out_channels * BottleNeck.expansion:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels * BottleNeck.expansion, stride=stride, kernel_size=1, bias=False),
                nn.BatchNorm2d(out_channels * BottleNeck.expansion)
            )

    def forward(self, x):
        # Output = ReLU(F(x) + shortcut(x))
        return F.relu(self.residual_function(x) + self.shortcut(x))

- ResNet50

class ResNet(nn.Module):
    def __init__(self, block, num_classes=10):
        super().__init__()
        self.in_channels = 64

        # conv1: 7x7/2 convolution followed by 3x3/2 max pooling
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )

        # ResNet50 stage layout: [3, 4, 6, 3] blocks; each stage after conv2_x halves the spatial size
        self.conv2_x = self._make_layer(block, 64, 3, 1)
        self.conv3_x = self._make_layer(block, 128, 4, 2)
        self.conv4_x = self._make_layer(block, 256, 6, 2)
        self.conv5_x = self._make_layer(block, 512, 3, 2)

        # Global average pooling -> fully connected classifier
        self.avg_pool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

    def _make_layer(self, block, out_channels, num_blocks, stride):
        # Only the first block in a stage uses `stride`; the rest use stride 1
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.in_channels, out_channels, stride))
            self.in_channels = out_channels * block.expansion
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2_x(x)
        x = self.conv3_x(x)
        x = self.conv4_x(x)
        x = self.conv5_x(x)
        x = self.avg_pool(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 512 * expansion)
        x = self.fc(x)
        return x  # raw logits; softmax is applied inside nn.CrossEntropyLoss

Set Device and Model

use_cuda = torch.cuda.is_available()
print("use_cuda : ", use_cuda)
device = torch.device("cuda:0" if use_cuda else "cpu")

net = ResNet(BottleNeck).to(device)

# torchsummary defaults to device="cuda", so pass the actual device type;
# summary() prints the table itself and returns None
summary(net, (3, 224, 224), device="cuda" if use_cuda else "cpu")
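The shortcut condition in `BottleNeck` is `stride != 1 or in_channels != out_channels * expansion`. Because of it, only the first block of each stage gets a 1x1 projection shortcut. conv2_x needs one as well, even with stride 1, because the channel count grows from 64 to 256. The sketch below replays the bookkeeping of `_make_layer` in plain Python to count the two shortcut types in ResNet50 (`shortcut_types` is a hypothetical helper). This matches the paper's option of projecting only when the dimensions change.

```python
EXPANSION = 4  # mirrors BottleNeck.expansion


def shortcut_types(stages, in_channels=64):
    """Replays ResNet._make_layer and the BottleNeck shortcut condition.
    stages: list of (out_channels, num_blocks, first_stride)."""
    kinds = []
    for out_channels, num_blocks, stride in stages:
        for s in [stride] + [1] * (num_blocks - 1):
            needs_projection = s != 1 or in_channels != out_channels * EXPANSION
            kinds.append("projection" if needs_projection else "identity")
            in_channels = out_channels * EXPANSION
    return kinds


RESNET50 = [(64, 3, 1), (128, 4, 2), (256, 6, 2), (512, 3, 2)]
kinds = shortcut_types(RESNET50)
print(kinds.count("projection"), kinds.count("identity"))  # 4 12
```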

Loss and Optimizer
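The model ends with `self.fc` and has no softmax layer. `nn.CrossEntropyLoss` expects raw logits and internally applies log-softmax followed by negative log-likelihood, averaging over the batch by default. Below is a numerically stable pure-Python sketch of that computation. `cross_entropy` and `batch_cross_entropy` are illustrative names, not PyTorch functions.

```python
import math


def cross_entropy(logits, target):
    """Log-softmax followed by negative log-likelihood for one sample.
    Subtracting max(logits) keeps exp() from overflowing."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def batch_cross_entropy(batch_logits, targets):
    """Mean over the batch, matching the default reduction='mean'."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


print(cross_entropy([0.0] * 10, 3))  # ln(10), about 2.3026
```

With near-uniform outputs over 10 classes the loss is ln(10) ≈ 2.30. That makes a useful reference point for the loss at the start of training.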

# Reuses `device` from the previous cell; a fresh ResNet50 is created for training
model = ResNet(BottleNeck).to(device)

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

Training Loop

writer = SummaryWriter("./resnet/tensorboard")

def train(model, device, train_loader, optimizer, epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        # CIFAR-10 targets are already int64 after default collation
        data, target = data.to(device), target.to(device)

        optimizer.zero_grad()
        output = model(data)
        loss = criterion(output, target)
        loss.backward()
        optimizer.step()

        if (batch_idx + 1) % 30 == 0:
            # Progress: samples seen so far / size of the training set
            print(f"{(batch_idx + 1) * len(data)}/{len(train_loader.dataset)}")

def test(model, device, test_loader):
    model.eval()
    test_loss = 0.0
    correct = 0

    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            # criterion returns the batch mean, so weight it by the batch size
            test_loss += criterion(output, target).item() * data.size(0)
            pred = output.argmax(1)
            correct += (pred == target).sum().item()

    # Per-sample loss and accuracy over the whole test set
    # (TensorBoard logging happens once per epoch in the loop below)
    test_loss /= len(test_loader.dataset)
    accuracy = correct / len(test_loader.dataset)
    return test_loss, accuracy

Per-Epoch Activity

for epoch in tqdm(range(1, num_epochs + 1)):
    train(model, device, trainloader, optimizer, epoch)
    test_loss, test_accuracy = test(model, device, testloader)

    writer.add_scalar("Test Loss", test_loss, epoch)
    writer.add_scalar("Test Accuracy", test_accuracy, epoch)

    print(f"Processing Result = Epoch : {epoch} Loss : {test_loss} Accuracy : {test_accuracy}")

writer.close()

Result

print(f" Result of ResNet = Epoch : {epoch} Loss : {test_loss} Accuracy : {test_accuracy}")
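`nn.CrossEntropyLoss` returns a batch mean. To get a per-sample test loss, each batch mean has to be weighted by its batch size before dividing by the dataset size. Dividing a plain sum of batch means by the dataset size instead shrinks the reported loss by roughly the batch size. Below is a small pure-Python illustration, with made-up batch losses and illustrative helper names.

```python
def average_loss_weighted(batch_means, batch_sizes):
    """Per-sample average: weight each batch mean by the number of samples in it."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


def average_loss_naive(batch_means, dataset_size):
    """Sum of batch means divided by the dataset size (too small by ~batch size)."""
    return sum(batch_means) / dataset_size


# CIFAR-10 test set: 10,000 images -> 78 batches of 128 plus one batch of 16
sizes = [128] * 78 + [16]
means = [2.0] * 79  # pretend every batch has a mean loss of 2.0
print(average_loss_weighted(means, sizes))  # 2.0
print(average_loss_naive(means, 10_000))    # 0.0158
```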

Visualization

%load_ext tensorboard

%tensorboard --logdir=./resnet/tensorboard --port=6006
